Earlier this year, I published an article going over how the senior living industry should be thinking about AI.

Since then, our product development team has spent countless hours running experiments, refining use-cases, and, most importantly, getting feedback from clinical and operational teams on what's actually working and driving impact.

Here's what we've learned: the most powerful AI in senior living isn't a chatbot. It's invisible infrastructure that surfaces the right information at exactly the right moment.

The paradox of using AI to deepen human connection is a great illustration of how we generally think about the role technology can play in senior living.

At August Health, our mission is to empower caregivers to deliver care by removing the mundane so they can focus on the essential.

At its core, care is person to person. We humans aren't going anywhere.

Contextualization is Key

As we continue to distill, productize, and agentify [1] our work, everything we've built comes down to contextualized AI. [2]

That concept should be front of mind for anyone trying to understand and evaluate how AI will fit into our industry's workflows. It’s about feeding models the right data to understand senior living, and surfacing their output at the most helpful moment.

Our EHR is the front-end to an incredible repository of data. Over the last few years, our product's surface area has grown tremendously, from efficient paperless move-ins, to a best-in-class eMAR, to a delightful and robust billing platform. These products capture critical information about residents: their advance directives, contact details, acuity and care needs, incidents, medications (and their administrations!), ADLs, invoices, and billing records.

Part of the magic of our platform is the ease with which we ensure this data gets appropriately collected. Care-teams delight in using tools that make their jobs easier, which leads to more complete usage and ultimately more comprehensive data. In an era of rapidly improving AI models, one of the reasons we're so bullish on AI is that our data is so rich. As W. Edwards Deming summed it up: "In God we trust; all others bring data." [3]

Applying AI models to this data is already producing results that can feel almost magical. But as we explore these use-cases, it's clear that this work has to be done with a great deal of care and precision.

Built-in Context

For example, even with a leading foundation model, an early experiment on task summarization made sweeping generalizations: ADL tasks were grouped with community compliance tasks and statuses, and due dates were ignored, leading to an output that was largely useless but still looked good at first glance.

It took many iterations with both prompt and context engineering to arrive at the intended goal: to understand a community's compliance posture at a glance.

In another example, the same model initially had trouble summarizing a resident's historical incidents. It would raise alarms about benign observations while glossing over the clinically relevant details that were most helpful.

Dialing in the appropriate context and the lookback period (a balance between giving the model too much and too little historical data) and fine-tuning the model with industry-specific knowledge (a "fall" can be both a critical medical incident and a fun season for holidays) was key in arriving at a result that our early users have described as "jaw-dropping."
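To make the lookback idea concrete, here is a minimal Python sketch of trimming a resident's incident history to a fixed window before it goes into a model's context. It is not our production implementation: the `Incident` record, the 90-day default, and the item cap are hypothetical choices for illustration only.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Incident:
    # Hypothetical incident record; real EHR schemas will differ.
    occurred_on: date
    category: str
    note: str


def incidents_in_lookback(incidents, today, lookback_days=90, max_items=20):
    """Keep incidents inside the lookback window, newest first, capped at max_items."""
    cutoff = today - timedelta(days=lookback_days)
    recent = [i for i in incidents if cutoff <= i.occurred_on <= today]
    recent.sort(key=lambda i: i.occurred_on, reverse=True)
    return recent[:max_items]


def format_context(incidents):
    # One line per incident so the model sees dates and categories explicitly.
    return "\n".join(
        f"{i.occurred_on.isoformat()} [{i.category}] {i.note}" for i in incidents
    )


history = [
    Incident(date(2024, 1, 5), "fall", "Slipped in bathroom, no injury"),
    Incident(date(2024, 5, 20), "behavior", "Agitated at dinner"),
    Incident(date(2024, 6, 1), "fall", "Found on floor beside bed"),
]
window = incidents_in_lookback(history, today=date(2024, 6, 15), lookback_days=90)
print(format_context(window))
```

With a 90-day window ending June 15, the January incident falls outside the cutoff, so only the two recent incidents reach the model. Widening or narrowing `lookback_days` is exactly the "too much versus too little history" trade-off described above.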

Another consideration is that many models are prone to sycophantic, eager-to-please suggestions and recommendations. [4] That's harmless for innocuous use-cases, but in sensitive ones, such as reviewing medication lists for polypharmacy, a model might dangerously suggest discontinuing vital medications, perhaps ones that are being used off-label.

This matters because a health director's job is already impossible. We refuse to make it dangerous too.

We've done a great deal of work in guarding against these types of suggestions, focusing our model on doing what's most useful (like reviewing hundreds of thousands of medications) but without proffering potentially inaccurate or dangerous clinical recommendations.

Getting the Timing Right

Getting the machine context right is table stakes. But that's only half the equation. Even perfect data processing is worthless if we deliver insights at the wrong time or in the wrong format.

In my previous article, I introduced the concept of contextual AI, which is about "providing contextual information to the user, at the right time and place in their workflow".

In our team's work exploring this concept further, it's become clear that stateless, generative models allow for rapid iteration of different ideas. We can go from an off-hand comment made during a customer meeting to iterating different prompts and data contexts to explore various solutions in real-time (i.e., during the meeting).

Much of the work described above happens on the machine side of this equation: refining context, guiding outputs, and guarding against dangerous or unhelpful results. The internal tooling we've developed for this inquiry is an important part of pursuing AI thoughtfully and allows us to test, refine, and arrive at compelling solutions fast. Good tools matter. [5]

Once we've proven an AI insight is genuinely useful, we embed it directly into the workflows our teams already use. As you might expect, a lot of our initial work revolves around providing efficiency gains for clinical and operational tasks. Models are good at reviewing lots of unstructured data, which can save serious time for busy health and wellness directors.

Using AI to Deepen Human Connection

We've also been exploring use-cases around humanizing care: helping caregivers connect with residents as individuals.

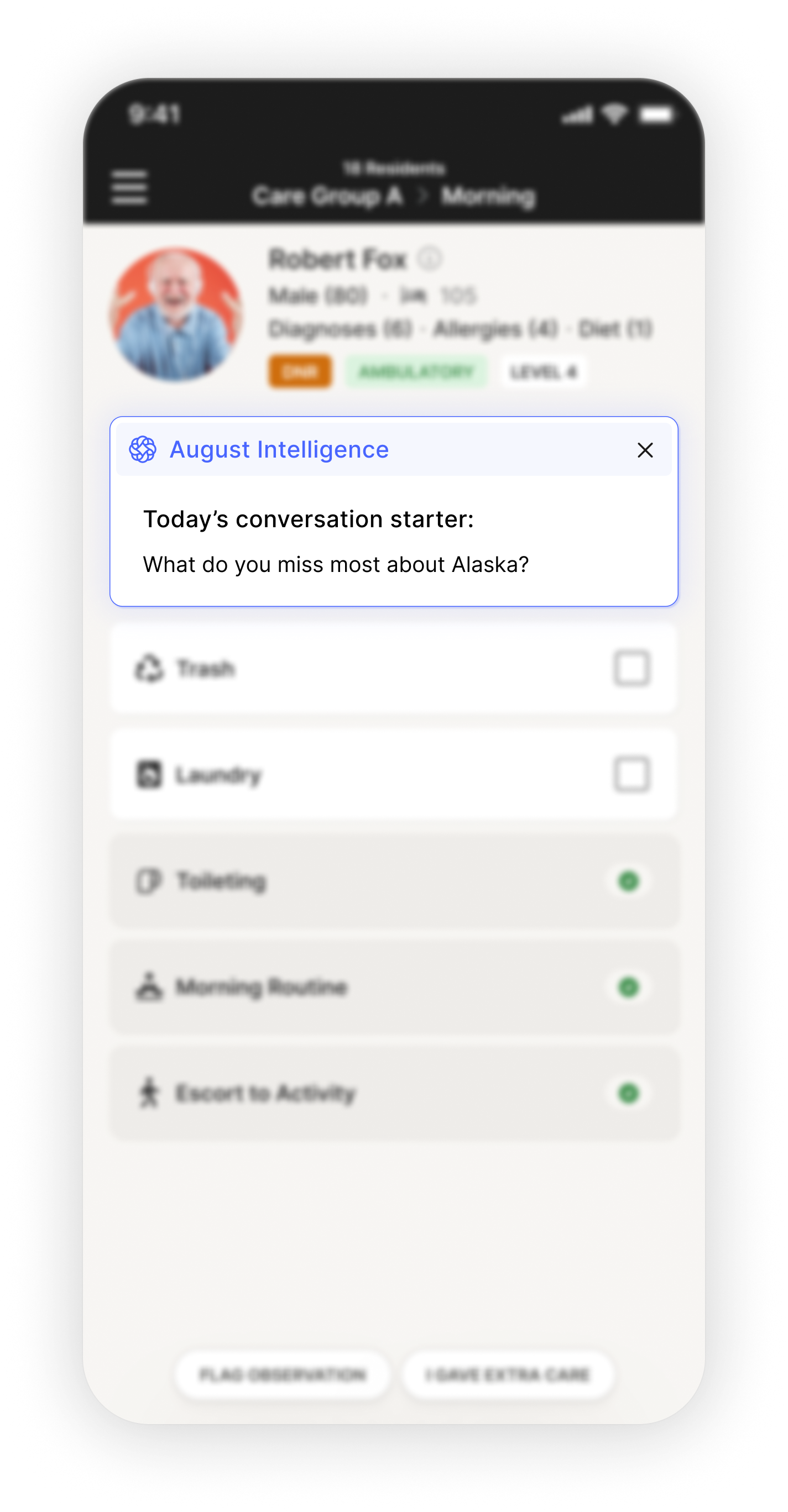

Here's where it gets really beautiful. We are able to provide dynamic conversation starters for caregivers in Care Track, our point-of-care solution. These rotating suggestions are guided by resident life histories and other sources, resulting in real meaning and human connection.

What was it like living in Denmark? What did you love most about it?

Based on: Background includes living in Copenhagen

What was your favorite thing about being a military wife?

Based on: Lived on military bases in TX and CA

Tell me about your blind date with Henry. How did that go?

Based on: Met husband Henry on blind date arranged by friends
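As a rough illustration of how rotating, source-grounded starters might be structured, here is a minimal Python sketch. It assumes the questions have already been drafted (for example by a model) from life-history facts, and it uses a simple day-based rotation. The `ConversationStarter` type, `starters_for_day` function, and `per_day` parameter are hypothetical, not Care Track's actual design.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ConversationStarter:
    question: str
    based_on: str  # the life-history fact the question was drawn from


def starters_for_day(starters, day, per_day=2):
    """Rotate through starters deterministically so each day shows a fresh subset."""
    if not starters:
        return []
    start = (day.toordinal() * per_day) % len(starters)
    count = min(per_day, len(starters))
    return [starters[(start + k) % len(starters)] for k in range(count)]


starters = [
    ConversationStarter(
        "What was it like living in Denmark? What did you love most about it?",
        "Background includes living in Copenhagen",
    ),
    ConversationStarter(
        "What was your favorite thing about being a military wife?",
        "Lived on military bases in TX and CA",
    ),
    ConversationStarter(
        "Tell me about your blind date with Henry. How did that go?",
        "Met husband Henry on blind date arranged by friends",
    ),
]

for s in starters_for_day(starters, date(2024, 6, 15)):
    print(f"{s.question}\n  Based on: {s.based_on}")
```

Keeping the `based_on` fact attached to each question means a caregiver can always see why a prompt was suggested, which makes it easier to trust and easier to correct if the underlying history is wrong.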

The conversation starters are just one example, but they illustrate something bigger about how we think about technology in senior living. Technology should make caregivers better at being human, not replace the humanity itself. That's the work.

What Comes Next

We're still in the early chapters of this story. Every week, we learn something new about what works and what doesn't.

But Deming was right. Now that we have real data, the patterns are unmistakable.

If you're evaluating AI for your communities, here's what matters:

- Can it surface the right information at the right time?

- Does it make your team's existing workflows better, or does it add new ones?

- And most importantly, does it give caregivers more time to be human, or less?

The goal isn't to build impressive AI demos. It's to give caregivers the tools and time to do what only humans can do: see people, not just residents. That's what good technology makes possible. And that's the future we're building toward.

Notes

- Agentifying? Agentification? See: https://www.anthropic.com/engineering/building-effective-agents

- The responsibility of storing and protecting this valuable data is one we don’t take lightly. (We’ve recently announced our SOC 2 and HIPAA certifications: trust.augusthealth.com.)

- Deming was an incredible polymath. Beyond his foundational work on statistics and management, he also played the flute and drums, and composed music: https://en.wikipedia.org/wiki/W._Edwards_Deming

- Model sycophancy is a common problem for foundation models: https://openai.com/index/sycophancy-in-gpt-4o/

- EHRs themselves are one of the most important examples of where the right tools can be a matter of life and death: https://seniorshousingbusiness.com/the-power-of-system-design/

This article was originally published on LinkedIn.
